Machine Learning (ML)

Machine learning is another rapidly growing field within artificial intelligence that involves creating algorithms and models that allow computers to learn from data, recognize patterns, and make decisions without being explicitly programmed.

There are three main types of machine learning: supervised, unsupervised, and reinforcement learning.

1. Supervised learning uses labelled data to train models for making predictions.

2. Unsupervised learning identifies patterns and relationships in unlabelled data.

3. Reinforcement learning trains an agent to make decisions based on feedback.

Common supervised learning algorithms include linear regression, logistic regression, and decision trees, while unsupervised learning uses algorithms such as k-means clustering, principal component analysis (PCA), and autoencoders.

Machine learning has many applications, including speech recognition, natural language processing, and image recognition, with endless possibilities for innovation and progress.

1. Supervised Learning Algorithms

Supervised learning algorithms are trained on datasets where each example is paired with a target or response variable, known as the label. The goal is to learn a mapping function from input data to the corresponding output labels, enabling the model to make accurate predictions on unseen data.

2. Unsupervised Learning Algorithms

Unsupervised learning algorithms work with unlabelled data to discover hidden patterns or structures without predefined outputs. They fall into three main categories based on their purpose: clustering, association rule mining, and dimensionality reduction. We will look at clustering algorithms first, then dimensionality reduction, and finally association rule mining.
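As a rough illustration of clustering, the sketch below implements a bare-bones k-means loop in plain Python. The sample points, the naive choice of the first k points as starting centroids, and the fixed iteration count are all assumptions made for the example; production libraries use more careful initialisation and stopping rules.

```python
def kmeans(points, k, iterations=10):
    """Minimal k-means: returns (centroids, clusters) for a list of coordinate tuples."""
    # Naive initialisation (an assumption for this sketch): use the first k points.
    centroids = [points[i] for i in range(k)]
    clusters = []
    for _ in range(iterations):
        # Assignment step: put each point in the cluster of its nearest centroid.
        clusters = [[] for _ in range(k)]
        for p in points:
            distances = [sum((a - b) ** 2 for a, b in zip(p, c)) for c in centroids]
            clusters[distances.index(min(distances))].append(p)
        # Update step: move each centroid to the mean of its assigned points.
        new_centroids = []
        for cluster, old in zip(clusters, centroids):
            if cluster:
                new_centroids.append(tuple(sum(dim) / len(cluster) for dim in zip(*cluster)))
            else:
                new_centroids.append(old)  # keep an empty cluster's centroid in place
        centroids = new_centroids
    return centroids, clusters

# Made-up, unlabelled 2D points forming two visible groups.
points = [(1.0, 1.0), (1.0, 2.0), (2.0, 1.0), (8.0, 8.0), (9.0, 8.0), (8.0, 9.0)]
centroids, clusters = kmeans(points, k=2)
```

No labels are supplied anywhere: the grouping emerges only from the distances between points.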

3. Reinforcement Learning Algorithms

Reinforcement learning involves training agents to make a sequence of decisions by rewarding them for good actions and penalizing them for bad ones. These methods are broadly categorized as model-based or model-free, and the two differ in how they interact with the environment: model-based methods plan using a model of the environment, while model-free methods learn directly from experience.
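To make the reward-and-penalty idea concrete, here is a minimal sketch of tabular Q-learning, one well-known model-free method. The four-state corridor environment, the reward values, and the hyperparameters (`alpha`, `gamma`, `epsilon`) are hypothetical choices for illustration only.

```python
import random

N_STATES = 4          # states 0..3; state 3 is the goal
ACTIONS = [-1, +1]    # move left or move right

def step(state, action):
    """Hypothetical toy environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True     # reward for reaching the goal
    return next_state, -0.1, False       # small penalty for every other move

def train(episodes=200, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)                          # explore
            else:
                action = max(ACTIONS, key=lambda a: q[(state, a)])    # exploit
            next_state, reward, done = step(state, action)
            best_next = 0.0 if done else max(q[(next_state, a)] for a in ACTIONS)
            # Q-learning update: nudge the estimate toward reward + discounted future value.
            q[(state, action)] += alpha * (reward + gamma * best_next - q[(state, action)])
            state = next_state
    return q

q_table = train()
# Greedy policy: the highest-valued action in each non-terminal state.
policy = {s: max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)}
```

After training, the greedy policy should prefer moving right in every state, since that is the shortest route to the rewarded goal.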

Linear Regression

Linear regression predicts a continuous value by finding the best-fit straight line between an input (independent variable) and an output (dependent variable). It fits the line using a method called “least squares”, which minimises the sum of squared differences between actual and predicted values. Examples include predicting a person’s weight based on their height or predicting house prices based on size.
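The least-squares fit for a single input variable has a closed-form solution, sketched below in plain Python. The height and weight figures are invented for illustration and are not real measurements.

```python
def fit_line(xs, ys):
    """Ordinary least squares for one input variable: returns (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance of x and y divided by the variance of x.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Made-up heights (cm) and weights (kg), for illustration only.
heights = [150, 160, 170, 180, 190]
weights = [50, 58, 66, 74, 82]

slope, intercept = fit_line(heights, weights)
predicted_weight = slope * 175 + intercept   # prediction for an unseen height of 175 cm
```

With this toy data the points lie exactly on a line, so the fitted line passes through all of them; real data would leave some residual error.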

Logistic Regression

Logistic regression is used for classification tasks. It uses a logistic function (an S-shaped curve) to model the relationship between input features and class probabilities, then assigns each data point to a category based on the predicted probability. It is most often applied to binary classes (e.g., spam or not spam) and can be extended to multi-class problems. Example: Predicting whether a customer will buy a product online (yes/no) or diagnosing if a person has a disease (sick/not sick).
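The sketch below shows how the logistic function turns a weighted sum of features into a probability and then into a yes/no decision. The weights, bias, and feature meanings are hand-picked assumptions for the example; in practice they would be learned from labelled data.

```python
import math

def sigmoid(z):
    """Logistic function: maps any real number to a value between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(features, weights, bias):
    """Probability of the positive class for one example."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return sigmoid(z)

def predict_class(features, weights, bias, threshold=0.5):
    """Assign class 1 if the probability reaches the threshold, else class 0."""
    return 1 if predict_proba(features, weights, bias) >= threshold else 0

# Hypothetical model: features are (pages viewed, items in basket).
weights = [0.3, 1.2]
bias = -2.0

likely_buyer = predict_class([4.0, 2.0], weights, bias)     # z = 1.6, probability above 0.5
unlikely_buyer = predict_class([1.0, 0.0], weights, bias)   # z = -1.7, probability below 0.5
```

The 0.5 threshold is a common default, not a requirement; it can be moved when false positives and false negatives carry different costs.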

Decision Trees

A decision tree splits data into branches based on feature values, creating a tree-like structure. Each decision node tests a feature, and the splitting continues until a leaf node is reached, which provides the final prediction. Decision trees work for both classification and regression tasks.
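A single split of the kind a decision node performs can be sketched as follows. The text above does not name a splitting criterion, so this example assumes Gini impurity, one common choice for classification trees; the data is made up.

```python
def gini(labels):
    """Gini impurity of a list of class labels (0.0 means all labels are the same)."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_threshold(values, labels):
    """Try each midpoint between sorted distinct values; return (threshold, weighted impurity)."""
    best = (None, float("inf"))
    distinct = sorted(set(values))
    for low, high in zip(distinct, distinct[1:]):
        threshold = (low + high) / 2
        left = [lab for v, lab in zip(values, labels) if v <= threshold]
        right = [lab for v, lab in zip(values, labels) if v > threshold]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if score < best[1]:
            best = (threshold, score)
    return best

# Made-up feature values and labels for one feature.
values = [1, 2, 3, 10, 11, 12]
labels = ["no", "no", "no", "yes", "yes", "yes"]
threshold, impurity = best_threshold(values, labels)
```

A full tree repeats this search over every feature and then recurses into each branch until the leaves are pure enough or a depth limit is hit.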

Support Vector Machines (SVM)

SVMs find the best boundary (called a hyperplane) that separates data points into different classes. The hyperplane is defined by support vectors, the critical data points that lie closest to the boundary. SVMs can handle both linear and non-linear problems by using kernel functions. They focus on maximizing the margin between classes, which makes them robust for high-dimensional data or complex patterns.
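The sketch below shows what a linear SVM's hyperplane and margin look like once training is done. It does not train anything: the weight vector `w` and offset `b` are hand-picked assumptions, and the code only evaluates the resulting decision rule and margin width.

```python
import math

def decision_value(w, b, x):
    """Signed score w.x + b; zero on the hyperplane, +1 or -1 on the margin boundaries."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def classify(w, b, x):
    """Assign class +1 or -1 depending on which side of the hyperplane x falls."""
    return 1 if decision_value(w, b, x) >= 0 else -1

def margin_width(w):
    """Distance between the two margin boundaries w.x + b = +1 and w.x + b = -1."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

# Hypothetical hyperplane x1 + x2 - 3 = 0 (not the result of any training run).
w, b = [1.0, 1.0], -3.0

positive = classify(w, b, [2.0, 2.0])           # score +1: lies on the positive margin
negative = classify(w, b, [1.0, 1.0])           # score -1: lies on the negative margin
width = margin_width(w)
```

Points whose score is exactly +1 or -1 sit on the margin boundaries and play the role of support vectors. Training an SVM amounts to choosing `w` and `b` so that `width` is as large as possible while the classes stay separated.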

Neural Networks

A neural network, or artificial neural network, is a type of computing architecture that is based on a model of how a human brain functions, hence the name “neural.” Neural networks are made up of a collection of processing units called “nodes.” These nodes pass data to each other, much as neurons in a brain pass electrical impulses to each other.

Neural networks are used in machine learning, which refers to a category of computer programs that learn without explicit instructions. Specifically, neural networks are used in deep learning, an advanced type of machine learning that can draw conclusions from unlabelled data with little human intervention. For instance, a deep learning model built on a neural network and fed sufficient training data may be able to identify items in a photo it has never seen before.
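The idea of nodes passing data to each other can be sketched as a forward pass through a tiny network. The layer sizes, weights, biases, and the sigmoid activation here are arbitrary assumptions for illustration; a real network would learn its weights from training data.

```python
import math

def sigmoid(z):
    """Squashes a node's weighted sum into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def layer_forward(inputs, weights, biases):
    """Each node computes a weighted sum of its inputs plus a bias, then applies sigmoid."""
    return [
        sigmoid(sum(w * x for w, x in zip(node_weights, inputs)) + bias)
        for node_weights, bias in zip(weights, biases)
    ]

# Hypothetical, hand-picked parameters: 2 inputs -> 2 hidden nodes -> 1 output node.
hidden_weights = [[0.5, -0.5], [1.0, 1.0]]
hidden_biases = [0.0, -1.0]
output_weights = [[1.0, -1.0]]
output_biases = [0.0]

def forward(inputs):
    """Pass the inputs through the hidden layer, then feed its outputs to the output layer."""
    hidden = layer_forward(inputs, hidden_weights, hidden_biases)
    return layer_forward(hidden, output_weights, output_biases)

output = forward([0.0, 0.0])
```

Deep learning stacks many such layers and adjusts the weights automatically during training, rather than fixing them by hand as done here.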

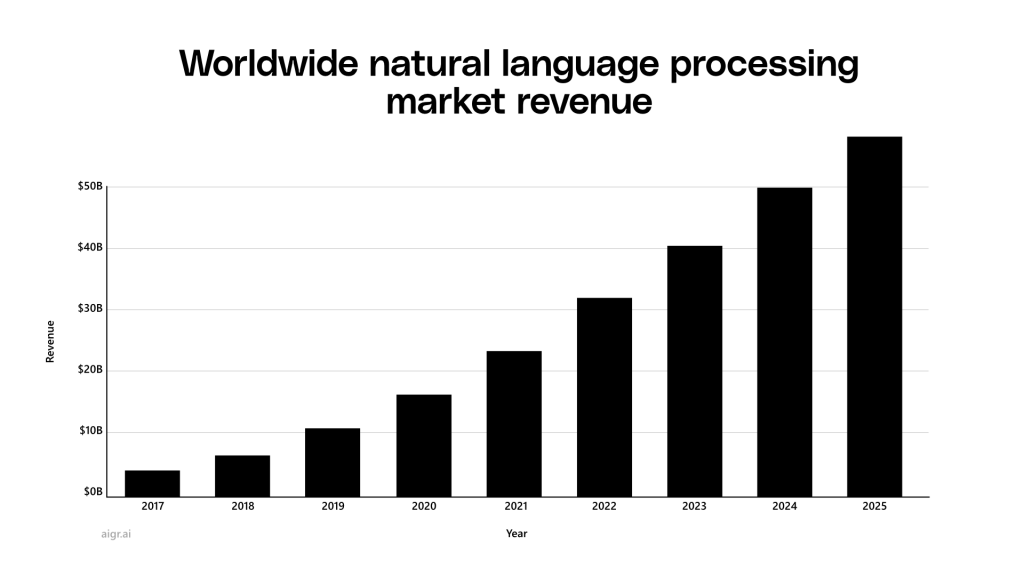

Machine Learning Market Set to Surge 440% by 2031

While the entire AI industry has experienced explosive growth in recent years, the rise of machine learning has been nothing short of extraordinary, and that momentum shows no signs of slowing. This technology has transformed industries from finance to healthcare and continues to attract record investments, showing investors believe in its long-term potential. In Q1 2025 alone, companies and startups working in the machine learning space raised a mind-blowing $54.8 billion, the highest quarterly figure in market history. With the surge of VC investments, machine learning continues its meteoric growth, far outpacing other AI sectors and the broader market.

According to forecasts, the machine learning industry is expected to reach $105.4 billion in 2025, a $30 billion increase in just one year. But that’s only the beginning. The market is projected to skyrocket by nearly 440%, hitting an impressive $568 billion by 2031. This triple-digit growth is even more impressive when compared to other AI sectors. Statistics show machine learning will grow 40% faster than the industry average of 331% by 2031. Natural language processing will trail even more with a 277% increase, or 58% lower than machine learning’s growth. AI robotics follows with a 316% jump, reflecting a 38% gap. Meanwhile, computer vision and autonomous sensor technologies lag far behind. Statistics show computer vision will grow 200% slower than machine learning, while autonomous and sensor technology is expected to fall behind by a staggering 300%, highlighting the huge difference in growth rates across AI’s main sectors.
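For readers who want to check figures like these, the short sketch below shows how a percentage growth number is derived from two market sizes. It also shows two common ways to compare growth rates, in percentage points and as a relative difference, which give different numbers. The article does not state which convention its comparison figures use, so the function names and the choice of comparison are assumptions.

```python
def percent_growth(start, end):
    """Percentage increase from start to end."""
    return (end - start) / start * 100.0

def relative_difference(rate_a, rate_b):
    """How much larger rate_a is than rate_b, as a percentage of rate_b."""
    return (rate_a / rate_b - 1.0) * 100.0

# Market size in $ billions, 2025 forecast versus 2031 forecast (figures from the text).
ml_growth = percent_growth(105.4, 568.0)      # roughly 439, i.e. "nearly 440%"

# Two ways to compare 440% growth with the 331% industry average.
point_gap = 440 - 331                         # gap in percentage points
ratio_gap = relative_difference(440, 331)     # gap relative to the industry average
```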

Machine Learning for Healthcare

The healthcare industry has witnessed significant advancements in ML applications, particularly in medical diagnosis, pandemic monitoring, and imaging diagnostics. ML-based medical diagnosis has the potential to transform patient care, and early identification of potential pandemics can save lives. Additionally, ML algorithms have demonstrated outstanding accuracy in imaging diagnostics, such as radiology and pathology.

The global AI in healthcare market was valued at $11.06 billion in 2021 and is projected to reach $187.95 billion by 2030 (Precedence Research, Statista). Clinical trials, a crucial aspect of healthcare research, accounted for over 24.2% of the revenue generated by the AI in healthcare market in 2021 (Precedence Research).

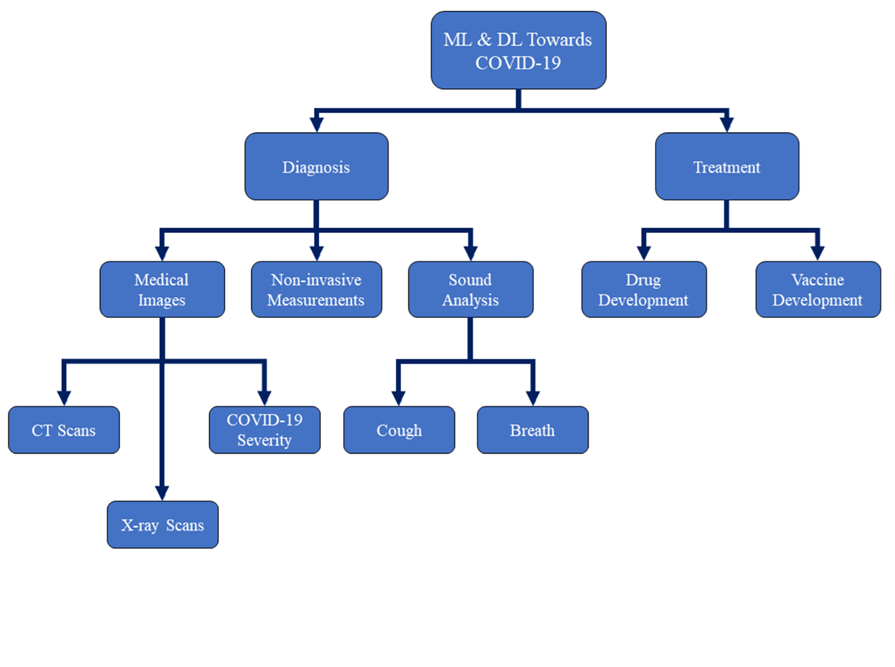

The COVID-19 Pandemic

The COVID-19 outbreak rapidly accelerated the growth of the machine learning market, and this trend is expected to continue during the assessment period. This is due to the rising adoption of ML technology in automotive, retail, healthcare, and other sectors. The application of AI technology also helped researchers combat the pandemic. For instance, according to the Brookings Institution, researchers in South Korea used geolocation data and surveillance camera footage to track coronavirus patients. Data scientists combined this footage with machine intelligence algorithms to predict the location of the next outbreak, inform the responsible authorities, and track COVID-19 positive patients in real time. Such initiatives may increase the demand for machine learning solutions over the coming years.

Machine Learning for Manufacturing

In the manufacturing industry, machine learning can optimize manufacturing processes, supply chain management, and on-demand production. ML algorithms can auto-correct manufacturing processes, leading to improved efficiency and reduced costs. With the adoption of ML and AI, manufacturers can transform their operations and gain a competitive edge.

The AI in manufacturing market was estimated to be valued at $2.3 billion in 2022 and projected to reach $16.3 billion by 2027 (Markets and Markets). Despite the potential benefits, only 9% of manufacturing companies state that their ML and AI projects have met their expectations in terms of benefits, budget, and time invested (Deloitte).

Benefits of AI in Manufacturing

Manufacturers have high expectations for AI. According to a recent report, at least 70% of manufacturers said their use of AI would highly or moderately benefit 31 areas related to business performance and production operations. However, only 22% had a specific set of metrics in place to measure AI’s effectiveness. By understanding the nuances of the eight benefits below, manufacturing leaders can better track AI’s impact.

- Improved maintenance and operations: By collecting and analysing data about equipment performance, AI, along with ML, can help businesses schedule maintenance and repairs before anything breaks or fails. This predictive approach minimises unexpected downtime, lowers repair costs and extends machinery life.

- Enhanced quality and precision: AI-driven quality-control systems analyse goods and compare results to established standards. This helps manufacturers eliminate product defects before goods are mass-produced, preventing the possibility of costly recalls or potential liabilities. Another benefit: These systems can be easily and quickly adjusted if designs change or to accommodate order customisation.

- Efficient production and automation: AI uses automation to spot problem areas for improvement so that production workflows become more efficient. And workers are freed to tackle hands-on problem-solving and strategic planning.

- Supply chain and inventory management: AI presents manufacturers with real-time insights into their supply chains and inventory levels. This data, often combined with demand forecasts, helps managers align supply with demand to minimise financial losses associated with under- or overstocking.

- Advanced product development and design: Product designers can use AI and AR technology to create virtual models of products and test alternative versions, without spending the time and resources otherwise required to physically produce goods. These so-called “digital twins” aid in remote troubleshooting, maintenance and performance improvements.

- Cost efficiency and business value: AI-driven data analytics increase manufacturers’ visibility into operations. This helps them see what is and isn’t working, leading to enhancements that lower costs, increase output and shorten lead times — all of which helps their businesses become more competitive and profitable.

- Safety and compliance: Collaborative robots, aka cobots, are AI-driven tools that work alongside real-life workers and, among their applications, can handle dangerous tasks that might lead to possible human injury, such as pouring molten metal on an assembly line. Cobots also automate manual, repetitive or error-prone tasks, which can increase the safety of workers who might absentmindedly lose focus. Additionally, managers can use AI to monitor work environments to detect unsafe conditions or areas of regulatory noncompliance.

- Innovation and competitive edge: AI and ML can evolve alongside businesses as they scale operations, especially through integration with other sophisticated technologies, such as IoT devices and smart sensors. Even during transitional growth periods, AI can effectively analyse additional aspects of production and provide actionable recommendations, so manufacturers derive the insight they need to innovate, adapt, and gain or maintain a competitive advantage.