What Is AI Governance?

AI governance encompasses the frameworks, policies and practices that promote the responsible, ethical and safe development and use of AI systems. Boards will collaborate with key technology and risk stakeholders to set guidelines for transparency, accountability and fairness in AI technologies to prevent harm and bias while maximizing their benefits operationally and strategically.

Responsible AI governance considers the following:

Security and privacy: Chief technology officers, risk officers, chief legal officers and their boards must develop a governance approach that protects data, prevents unauthorized access and ensures AI systems don’t become a cybersecurity threat.

Ethical standards: AI governance policies should promote human-centric and trustworthy AI and ensure a high level of protection of health, safety and fundamental human rights.

Regulatory compliance: Boards should also consider compliance with applicable legal frameworks that govern AI usage where they operate, or intend to operate, such as the EU’s AI Act.

Accountability and oversight: Organizations should assign responsibility for AI decisions to ensure human oversight and prevent misuse.

Understanding AI Governance

AI governance is the nucleus of responsible and ethical artificial intelligence implementation within enterprises. Encompassing principles, practices, and protocols, it guides the development, deployment, and use of AI systems. Effective AI governance promotes fairness, ensures data privacy, and enables organizations to mitigate risks. The importance of AI governance can’t be overstated, as it serves to safeguard against potential misuse of AI, protect stakeholders’ interests, and foster user trust in AI-driven solutions.

Key Components of AI Governance

Ethical guidelines outlining the moral principles and values that guide AI development and deployment form the foundation of AI governance. These guidelines typically address issues such as fairness, transparency, privacy, and human-centricity. Organizations must establish clear ethical standards that align with their corporate values, as well as society’s expectations.

Regulatory frameworks play a central role in AI governance by ensuring compliance with relevant laws and industry standards. As AI technologies continue to advance, governments and regulatory bodies develop new regulations to address emerging challenges. Enterprises must stay abreast of these evolving requirements and incorporate them into their governance structures.

Accountability mechanisms are essential for maintaining responsibility throughout the AI development lifecycle. These mechanisms include clear lines of authority, decision-making processes, and audit trails. By establishing accountability, organizations can trace AI-related decisions and actions back to individuals or teams, ensuring proper oversight and responsibility.
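As a minimal sketch of the audit-trail idea described above, the following Python shows one way to record AI-related decisions against a named owner so they can be traced later. The class names, field names and example identifiers are hypothetical and chosen only for illustration; a real system would also need persistent, tamper-resistant storage.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEntry:
    """One AI-related decision tied to an accountable owner (illustrative fields)."""
    system: str    # hypothetical identifier of the AI system
    decision: str  # what was decided or changed
    owner: str     # individual or team accountable for the decision
    timestamp: datetime = field(default_factory=lambda: datetime.now(timezone.utc))


class AuditTrail:
    """In-memory, append-only log for tracing decisions back to owners."""

    def __init__(self) -> None:
        self._entries: list[AuditEntry] = []

    def record(self, system: str, decision: str, owner: str) -> AuditEntry:
        """Append a new entry and return it."""
        entry = AuditEntry(system=system, decision=decision, owner=owner)
        self._entries.append(entry)
        return entry

    def decisions_by_owner(self, owner: str) -> list[AuditEntry]:
        """All recorded decisions a given owner is accountable for."""
        return [e for e in self._entries if e.owner == owner]

    def owners_for_system(self, system: str) -> set[str]:
        """Everyone who has made a recorded decision about a given system."""
        return {e.owner for e in self._entries if e.system == system}
```

Keeping entries immutable and the log append-only is one design choice that supports later review: past decisions cannot be silently edited.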

AI governance addresses transparency, ensuring that AI systems and their decision-making processes are understandable to stakeholders. Organizations should strive to explain how their LLMs work, what data they use, and how they arrive at their outcomes. Transparency allows for meaningful scrutiny of AI systems.

Risk management forms a critical component of AI governance, as it involves identifying, assessing, and mitigating potential risks associated with AI implementation. Organizations must develop risk management frameworks that address technical, operational, reputational, and ethical risks inherent in AI systems.
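The identify, assess and mitigate steps can be illustrated with a small risk register. This is a sketch under stated assumptions: the four categories come from the paragraph above, but the 1 to 5 scales, the likelihood times impact score and the threshold of 12 are common conventions assumed here, not something the text prescribes.

```python
from dataclasses import dataclass
from enum import Enum


class RiskCategory(Enum):
    TECHNICAL = "technical"
    OPERATIONAL = "operational"
    REPUTATIONAL = "reputational"
    ETHICAL = "ethical"


@dataclass
class Risk:
    description: str
    category: RiskCategory
    likelihood: int       # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int           # assumed scale: 1 (negligible) to 5 (severe)
    mitigation: str = ""  # empty string means no mitigation recorded yet

    def __post_init__(self) -> None:
        # Reject values outside the assumed 1-5 scales.
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    @property
    def score(self) -> int:
        """Assumed scoring rule: likelihood multiplied by impact."""
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks ordered from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


def unmitigated(risks, threshold=12):
    """Risks at or above the (assumed) threshold with no mitigation recorded."""
    return [r for r in risks if r.score >= threshold and not r.mitigation]
```

A register like this makes it easy to see which high-scoring risks still lack an owner-approved mitigation.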

Why is AI governance important?

Corporate governance more broadly arose to balance the interests of all key stakeholders (leadership, employees, customers, investors and more) fairly, transparently and for the company’s good. AI governance is similarly important because it prioritizes ethics and safety in developing and deploying AI. “The corporate governance implications of AI are becoming increasingly understood by boards, but there is still room for improvement,” says Jo McMaster, Regional Vice President of Sales at Diligent. Without good governance, AI can lead to economic and social disruptions.

The Importance of Having a Strong AI Governance Approach

Prevents bias: AI models can inherit biases from training data, leading to unfair hiring, lending, policing and healthcare outcomes. Governance proactively identifies and mitigates these biases.

Prioritizes accountability: When AI makes decisions, who is responsible? Governance holds humans accountable for AI-driven actions, preventing harm from automated decision making. PwC’s Head of AI Public Policy and Ethics Maria Axente says, “We need to be thinking, ‘What AI do we have in the house, who owns it and who’s ultimately accountable?’”

Protects privacy and security: AI relies on vast amounts of data, a particular risk for healthcare and financial organizations handling sensitive information. Governance establishes guidelines for data protection, encryption and ethical use of personal information.

Prepares for AI’s environmental, social and governance (ESG) impact: Generative AI has a significant environmental impact, since training and running models requires large amounts of electricity and water. It is also reshaping job markets and corporate operations. Governance helps create policies that balance AI’s opportunities with its ESG risks.

Promotes transparency and trust: Many AI systems are considered “black boxes” with little insight into their decision-making. Governance encourages transparency and helps users trust and interpret AI outcomes.

Balances innovation and risk: While AI holds immense potential for progress in healthcare, finance and education, governance weighs innovation alongside possible ethical considerations and public harm.

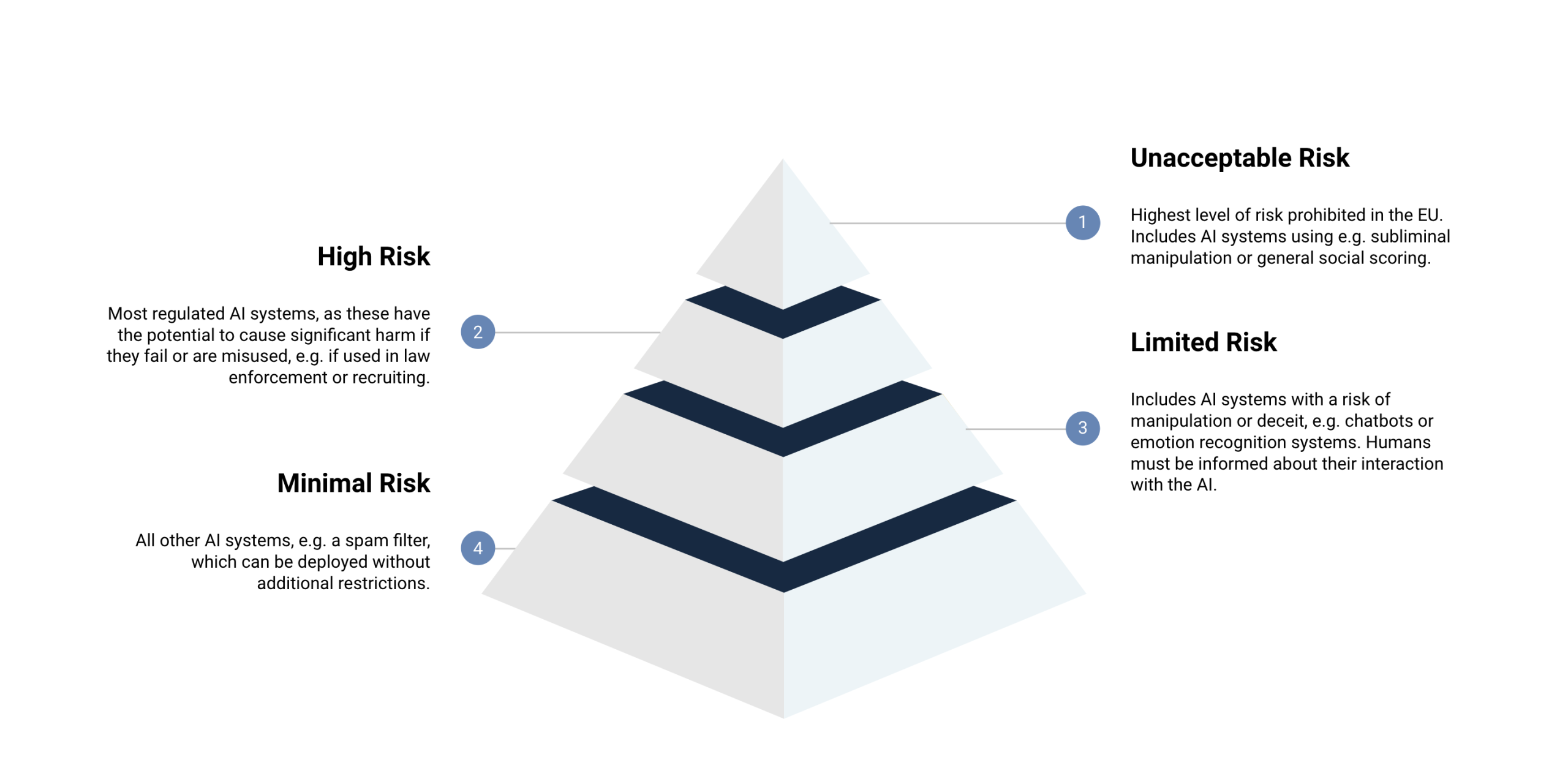

Risk Model Breakdown Structure

Unacceptable risk is the highest level of risk. This tier can be divided into eight (initially four) AI application types that are incompatible with EU values and fundamental rights. These are applications related to:

Subliminal manipulation: changing a person’s behaviour without their awareness in a way that harms that person. An example could be a system that influences people to vote for a particular political party without their knowledge or consent.

Exploitation of the vulnerabilities of persons resulting in harmful behaviour: this includes social or economic situation, age and physical or mental ability. For instance, a toy with a voice assistant that may encourage children to do dangerous things.

Biometric categorization of persons based on sensitive characteristics: this includes gender, ethnicity, political orientation, religion, sexual orientation and philosophical beliefs.

General purpose social scoring: using AI systems to rate individuals based on their personal characteristics, social behaviour and activities, such as online purchases or social media interactions. The concern is that, for example, someone could be denied a job or a loan simply because of their social score that was derived from their shopping behaviour or social media interactions, which might be unjustified or unrelated.

Real-time remote biometric identification (in public spaces): biometric identification systems will be banned, including ex-post identification. Exceptions can be made for law enforcement with judicial approval and under the Commission’s supervision. This is only possible for the pre-defined purposes of targeted search of crime victims, terrorism prevention and targeted search of serious criminals or suspects (e.g. trafficking, sexual exploitation, armed robbery, environmental crime).

Assessing the emotional state of a person: this holds for AI systems at the workplace or in education. Emotion recognition may be allowed as a high-risk application if it has a safety purpose (e.g. detecting whether a driver is falling asleep).

Predictive policing: assessing the risk of persons for committing a future crime based on personal traits.

Scraping facial images: creating or expanding databases with untargeted scraping of facial images available on the internet or from video surveillance footage.

AI systems related to these areas will be prohibited in the EU.

High-risk AI systems will be the most regulated systems allowed in the EU market. In essence, this level includes safety components of already regulated products and stand-alone AI systems in specific areas (see below), which could negatively affect the health and safety of people, their fundamental rights or the environment. These AI systems can potentially cause significant harm if they fail or are misused. We will detail what classifies as high-risk in the next section.

The third level of risk is limited risk, which includes AI systems with a risk of manipulation or deceit. AI systems falling under this category must be transparent, meaning humans must be informed about their interaction with the AI (unless this is obvious), and any deep fakes should be labelled as such. For example, chatbots are classified as limited risk. This is especially relevant for generative AI systems and their content.

The lowest level of risk described by the EU AI Act is minimal risk. This level includes all other AI systems that do not fall under the above-mentioned categories, such as a spam filter. AI systems under minimal risk do not have any restrictions or mandatory obligations. However, it is suggested to follow general principles such as human oversight, non-discrimination, and fairness.
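The four tiers described above can be sketched as a simple triage function. This is an illustrative simplification, not a legal classification: the practice tags, the two boolean flags and the obligation summaries are hypothetical names that paraphrase this section, and a real assessment depends on the text of the Act itself.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical tags for the eight prohibited application types listed above.
PROHIBITED_PRACTICES = frozenset({
    "subliminal_manipulation",
    "exploitation_of_vulnerabilities",
    "biometric_categorization_sensitive",
    "social_scoring",
    "realtime_remote_biometric_id",
    "emotion_recognition_work_or_education",
    "predictive_policing",
    "facial_image_scraping",
})

# One-line paraphrase of what each tier implies, taken from this section.
TIER_SUMMARY = {
    RiskTier.UNACCEPTABLE: "prohibited in the EU",
    RiskTier.HIGH: "allowed, but the most heavily regulated",
    RiskTier.LIMITED: "transparency obligations (disclose AI interaction, label deep fakes)",
    RiskTier.MINIMAL: "no mandatory obligations; general principles suggested",
}


def classify(practices, is_high_risk=False, has_transparency_risk=False):
    """Return the most severe tier that applies, checked from the top down.

    practices: iterable of practice tags used by the system (hypothetical tags).
    is_high_risk: True if the system falls in a high-risk area or is a safety
        component of a regulated product (determined elsewhere).
    has_transparency_risk: True for chatbots, deep fakes and similar systems.
    """
    if set(practices) & PROHIBITED_PRACTICES:
        return RiskTier.UNACCEPTABLE
    if is_high_risk:
        return RiskTier.HIGH
    if has_transparency_risk:
        return RiskTier.LIMITED
    return RiskTier.MINIMAL


# Example: a customer-service chatbot with no prohibited practices.
chatbot_tier = classify([], has_transparency_risk=True)
chatbot_summary = TIER_SUMMARY[chatbot_tier]
```

Checking tiers from most to least severe means a system that matches several descriptions is always assigned the strictest one.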

Major AI governance frameworks

Just as the European Union has the General Data Protection Regulation (GDPR) while the U.S. has no equivalent, AI governance frameworks vary by region. Countries often take a different approach to what it means for AI to be ethical, safe and responsible.

“The issue of competing values is not a new one for governments or the technology sector,” says Waterman. “During a time of regulatory uncertainty and ambiguity, where laws will lag behind technology, we need to find a balance between good governance and innovation to anchor our decision-making in ethical principles that will stand the test of time when we look back in the mirror in the years ahead.”

Global AI regulations currently lack harmonization. For instance, countries such as the United States and the UK currently emphasize guidelines and focus on innovation and maintaining a competitive edge on the global stage. In contrast, the EU’s AI Act is a comprehensive law that places a greater emphasis on assessing and mitigating the risks posed by AI to foster trustworthy AI and ensure the protection of fundamental human rights.

Some significant frameworks around the world include:

1. United Kingdom: In 2023, the UK published an AI regulation white paper. Rather than instituting a single law, the UK took a pro-innovation and sector-based approach to AI. The document encourages the self-regulation of ethical AI practices in industry, focusing significantly on safety, transparency and accountability in AI development.

2. European Union: The EU AI Act was passed in 2024 and classifies AI systems into risk categories based on the industry and how AI is developed and deployed. All AI applications under the act are subject to transparency, accountability and data protection requirements.

3. United States: The US relies on existing federal laws and guidelines to regulate AI but aims to introduce AI legislation and a federal regulation authority. Until then, developers and deployers of AI systems will operate in an increasing patchwork of state and local laws, which makes ensuring compliance challenging.

4. China: The New Generation Artificial Intelligence Development Plan is one of the most detailed AI regulatory systems. It includes strict AI controls, safety standards and facial recognition regulations. China also implemented the Interim Measures for the Management of Generative AI Services in 2023 to ensure AI-generated content aligns with Chinese social values.

5. India: Developed by the think tank NITI Aayog, India’s National Strategy for Artificial Intelligence focuses on the ethical adoption of AI in sensitive industries like healthcare, agriculture and finance. It proposes self-regulation and public-private partnerships for AI governance.

EU AI Act Timelines

February 2025 – the ban on prohibited AI will come into effect.

May 2025 – codes of practice for non-high risk AI systems come into effect.

August 2025 – requirements for GPAI systems come into effect; the AI Office opens its doors; and fines and penalties come into effect. This is also the deadline for EU countries to designate a national authority.

August 2026 – all high-risk requirements will be in force by this date. This is also when “regulatory sandboxes” will be available to allow organisations to test their AI systems in a controlled environment before releasing them to the public.

August 2027 – providers of GPAI systems that were on the market before August 2025 must be fully compliant with the Act by this date.
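For compliance planning, the milestones above can be kept in a small lookup that answers "what already applies on a given date?". This is a sketch under assumptions: the milestone labels paraphrase the list above, and because that list gives only months, the code treats a milestone as reached from the start of its month. The function names are hypothetical.

```python
from datetime import date

# (year, month, milestone), in chronological order, paraphrasing the list above.
MILESTONES = [
    (2025, 2, "Ban on prohibited AI"),
    (2025, 5, "Codes of practice for non-high-risk AI systems"),
    (2025, 8, "GPAI requirements, AI Office opens, fines and penalties, "
              "national authorities designated"),
    (2026, 8, "All high-risk requirements in force; regulatory sandboxes available"),
    (2027, 8, "GPAI systems on the market before August 2025 fully compliant"),
]


def milestones_reached(on: date) -> list:
    """Labels of milestones whose month has started by the given date."""
    return [label for year, month, label in MILESTONES
            if (year, month) <= (on.year, on.month)]


def next_milestone(on: date):
    """The first (year, month, label) still ahead of the given date, or None."""
    for year, month, label in MILESTONES:
        if (year, month) > (on.year, on.month):
            return (year, month, label)
    return None
```

Comparing `(year, month)` tuples avoids inventing specific days of the month that the list above does not state.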